During the last full week of September 2025, I attended the FOCUS conference in Seattle, put on by the Association for Advancing Automation (A3). The conference was all about the latest advancements in AI-enabled vision systems for use in the manufacturing environment. The key areas of interest were part identification for picking and the identification of manufacturing defects.

There was a lot of discussion of general AI trends and potential benefits, such as the ability to meaningfully aggregate multiple data sources. Those are macro trends for AI and are well covered in technical news outlets. I would like to share what I found to be my top three learnings about the use of AI-enabled vision systems in the manufacturing environment in the fall of 2025.

1) The solution to the AI training data problem is … AI

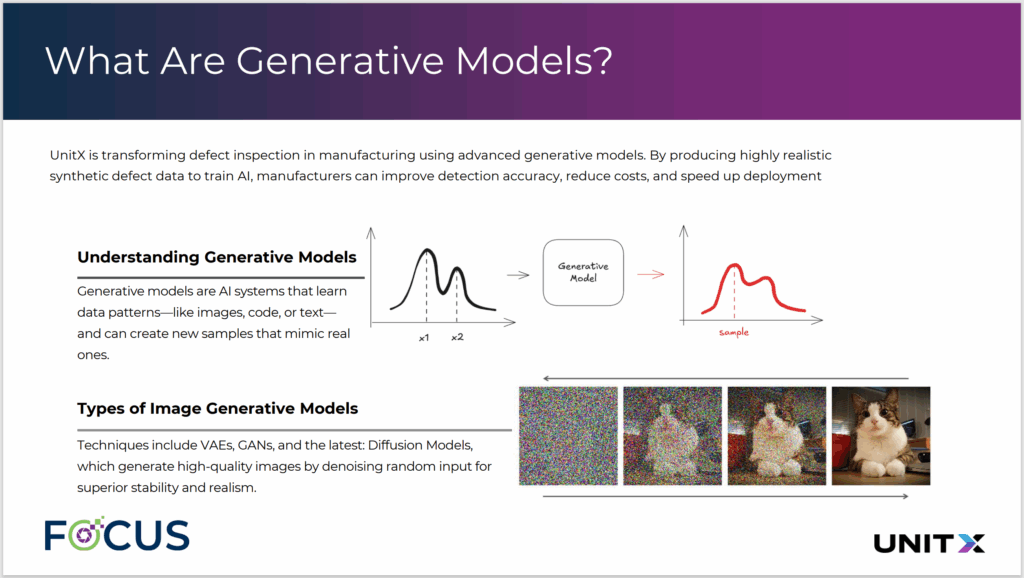

It is well known that one of the greatest challenges for AI is collecting a sufficient amount of labeled training data, that is, input data with the answer attached to it. For instance, if we’re training an AI system to recognize pictures of cats, we need a lot of pictures of cats and other animals, and we need to tell the system which animal is in which photo.

For common things like cats, there are a lot of photos available on the open internet. For items like your custom-designed part? Not so much. Until recently, generating enough training images was a blocker for many applications. Common solutions to this problem were the use of pre-trained models, transfer learning, and basic image augmentation. Those solutions alone fall short.

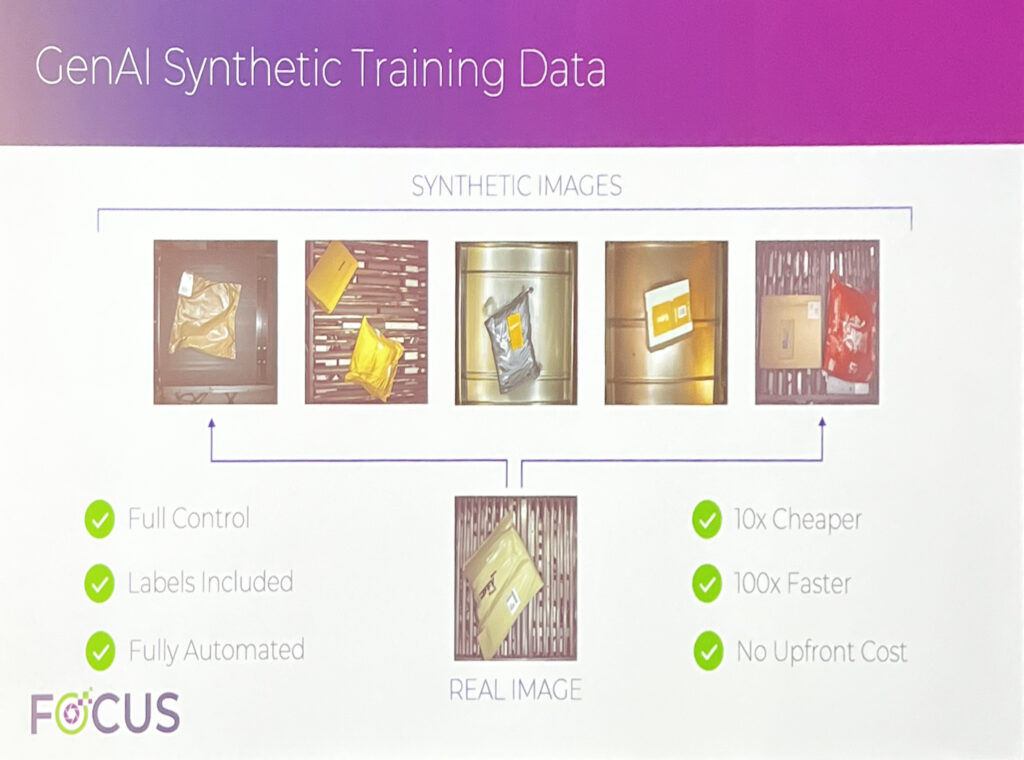

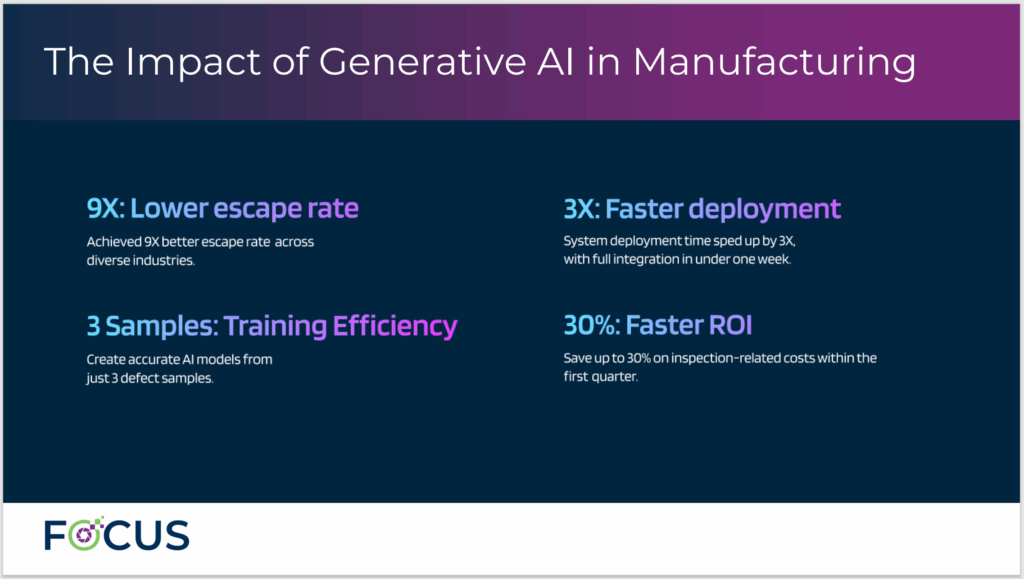
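For readers unfamiliar with the term, basic image augmentation means making slightly altered copies of the labeled images you already have. The sketch below is a minimal illustration and not any vendor's method: it assumes grayscale images stored as nested lists of 0-255 values, and the function names, gain range, and noise level are all hypothetical choices.

```python
import random

Image = list[list[int]]  # grayscale image as rows of 0-255 pixel values


def augment(image: Image, rng: random.Random) -> Image:
    """Return a copy with a random horizontal flip, brightness gain, and pixel noise."""
    flip = rng.random() < 0.5       # mirror roughly half of the copies
    gain = rng.uniform(0.8, 1.2)    # assumed brightness range of +/-20%
    out = []
    for row in image:
        src = row[::-1] if flip else row
        # Scale, add Gaussian noise (sigma of 3 is an assumption), clamp to 0-255.
        out.append([min(255, max(0, round(p * gain + rng.gauss(0.0, 3.0)))) for p in src])
    return out


def augment_dataset(images, labels, copies, seed=0):
    """Create `copies` augmented variants per image; each keeps the original label."""
    rng = random.Random(seed)
    aug_images, aug_labels = [], []
    for image, label in zip(images, labels):
        for _ in range(copies):
            aug_images.append(augment(image, rng))
            aug_labels.append(label)
    return aug_images, aug_labels
```

Every variant is derived from a real image, so this kind of augmentation cannot produce a defect type that was never photographed. That is one way to see why these techniques alone fall short.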

The advent of generative AI has changed the situation. Kevin Wang from UnitX and Pedro Pachuca from Advex shared their companies’ solutions.

Generative AI can now create synthetic images of defects sufficient to train AI image recognition systems to find and classify defects at accuracy rates sufficient for the manufacturing environment.

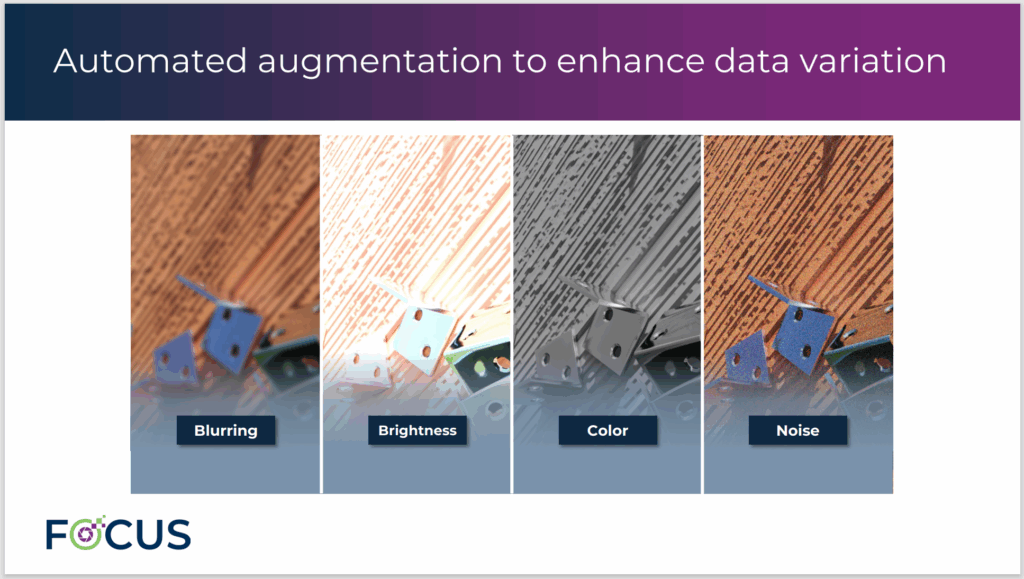

Once trained, these systems can be updated for product changes like color more easily than traditional vision systems. Sina Afrooze from Apera AI showed us how his system can generate variants to increase robustness.

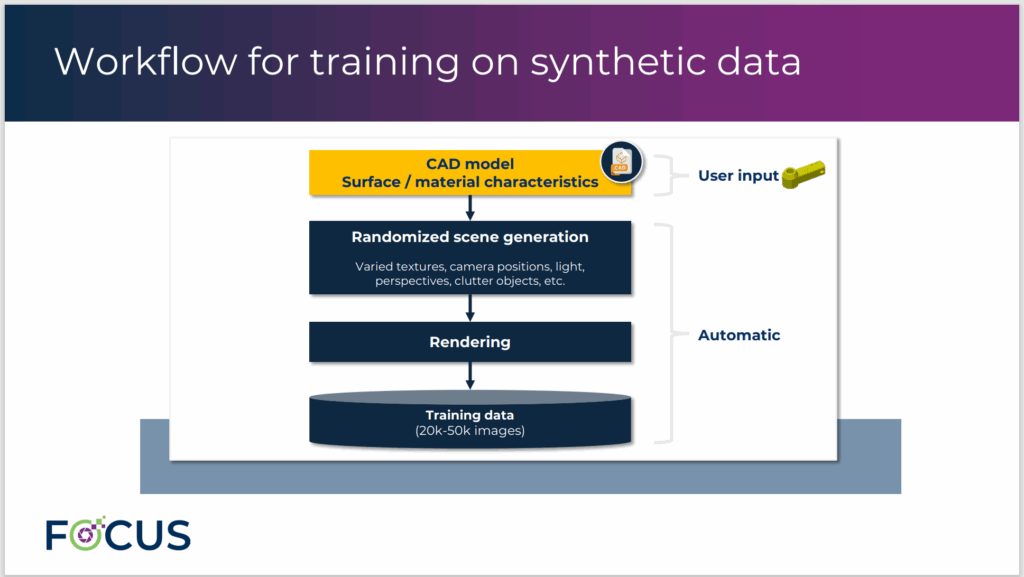

A related area where Apera and MVTec are working is the conversion of 3D part CAD into images that can be used for bin picking. Heiko Eisel of MVTec shared an outline of how their system works.

2) AI is a Tool, not a Magical Solution

Several speakers referenced a recent MIT study that found that 95% of AI pilots are unsuccessful. Common problems include ill-defined problems for AI to solve and solutions purchased without sufficient implementation support.

Eric Jewett of Loopr.ai, Eric Malis of Lincode Labs and Srivatsav Nambi of Elementary all described their companies’ systems, which deliver “no-code” software to simplify the integration of this technology for those who do not have an extensive AI background. In fact, several of the companies mentioned are selling full technology stacks, including the hardware for cameras, lighting and AI inference compute and the software to run it all.

Another important aspect of a successful implementation is the use of AI in conjunction with traditional solutions. If a traditional image processing technique is capable of solving your problem, it is usually cheaper, easier and faster to implement that solution. Many of the speakers advocated using traditional algorithms where possible for basic image analysis, then using AI for more advanced analysis. You can then focus your spend on AI processing where it can yield the most value.

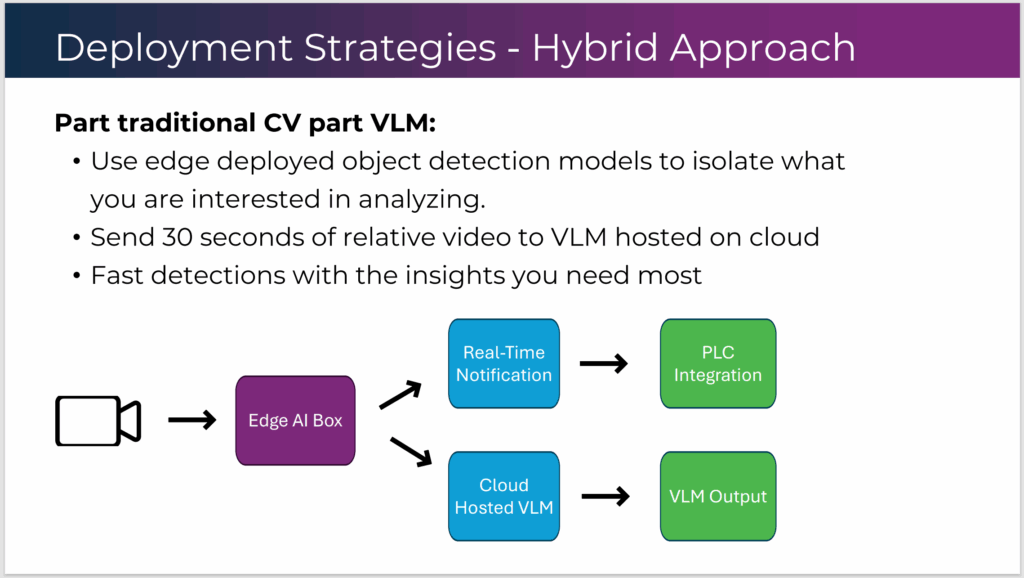

Brandon Neustadter of Datature shared that Visual Language Models (VLMs), one of the most exciting areas in AI-based image processing, currently require 10x to 50x the compute power of traditional image processing algorithms and can rarely run fast enough to meet the speed requirements for go/no-go production systems. He advocated using a hybrid approach as shown in his slide below.

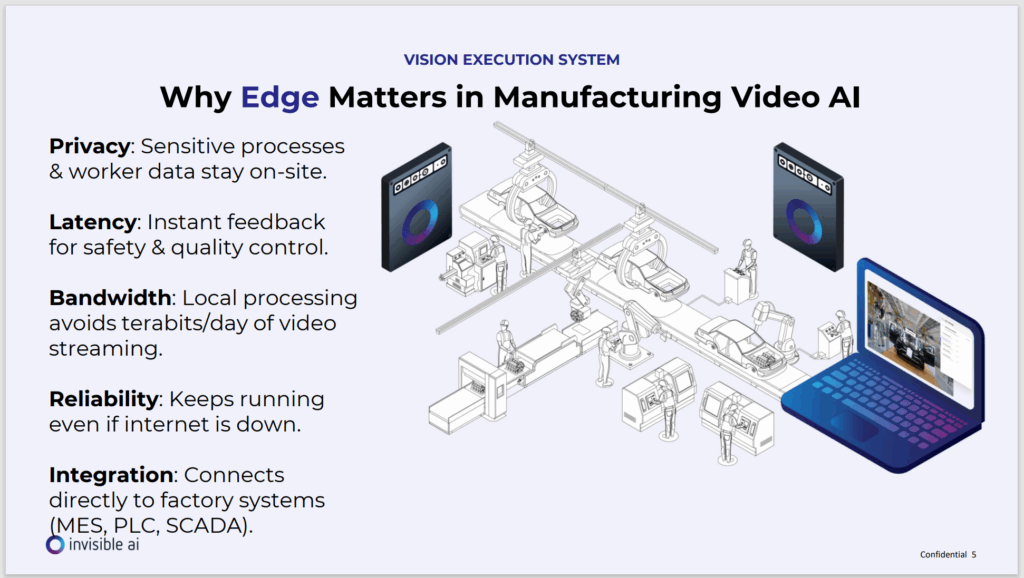
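One common way to build such a hybrid is a two-stage cascade: a cheap traditional check decides the clear-cut cases, and only ambiguous parts are sent to the expensive AI model. The sketch below is a generic illustration under stated assumptions, not a reproduction of the slide. The dark-pixel measure, both thresholds, and the `ai_model` callable are hypothetical.

```python
from typing import Callable, Sequence, Tuple

Image = Sequence[Sequence[int]]  # grayscale rows of 0-255 values


def dark_pixel_fraction(image: Image, dark_below: int = 50) -> float:
    """Traditional check: fraction of pixels darker than a fixed threshold."""
    pixels = [p for row in image for p in row]
    return sum(p < dark_below for p in pixels) / len(pixels)


def inspect_part(
    image: Image,
    ai_model: Callable[[Image], bool],
    pass_below: float = 0.01,   # assumed: almost no dark pixels means a clear pass
    fail_above: float = 0.20,   # assumed: many dark pixels means a clear fail
) -> Tuple[bool, str]:
    """Return (part_is_good, stage_that_decided)."""
    fraction = dark_pixel_fraction(image)
    if fraction <= pass_below:
        return True, "traditional"
    if fraction >= fail_above:
        return False, "traditional"
    # Only the ambiguous middle band pays for AI inference.
    return ai_model(image), "ai"
```

If most parts fall outside the ambiguous band, the slow model runs on only a small share of production, which keeps the average cycle time close to that of the traditional check.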

In his talk “Vision and AI at the Edge”, Eric Danziger of Invisible AI discussed some important points for consideration when determining your AI vision system architecture.

3) Effective use of AI Follows Tried and True Product Development Principles

Simplexity follows a proven product development process to balance development risk and cost, and applies engineering fundamentals to all development. It was striking to me how many of the learnings shared at the conference are common practice in product development engineering. This is not surprising given that AI has quickly gone from universities’ computer science research into hardware manufacturing. Crossing from one knowledge domain to another is always challenging, and it takes time for the best practices from each to be shared with the other.

Many of the talks pointed out aspects of AI implementations that should sound familiar to those well versed in product development engineering:

Iterative Design

In his opening keynote presentation, Ben Schreiner, Head of AI and Modern Data Strategy at Amazon Web Services, pointed out that Amazon Prime Day could not happen without automation, much of it enabled by AI. In particular, he pointed out that Prime Day is the result of 20 years of iterative improvement. Don’t expect to have a successful, customer-ready product on your first shot.

Digital Twins

Digital Twins were much discussed. Digital Twins are nothing more than simulation models, with a particular focus on 3D geometry and motion, typically for use with vision systems. Engineers have been using simulation models since the advent of the computer. With AI enabling the camera to become a general-purpose sensor, the AI field has provided new classes of sensors for use in industrial control systems.

This enables decades-old control system techniques to be applied to a wide range of new problems. Eric Danziger of Invisible AI challenged the audience to “think beyond inspection, think adaptive automation”. Using AI to identify trends and predict near-term impacts is Model Predictive Control, a technique widely used in the process automation industry for years. It will be interesting to see how these two fields converge on new, integrated solutions.
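For readers who have not met Model Predictive Control, the core loop is: predict a few steps ahead with a model, pick the control sequence with the lowest predicted cost, apply only its first move, then repeat. The sketch below is a toy illustration under stated assumptions. It uses a hypothetical scalar linear plant `x_next = a * x + b * u` and a brute-force search over three candidate moves rather than the numerical optimizer a production controller would use. In a vision application, the measured state `x` would come from the camera.

```python
from itertools import product


def mpc_step(x, target, a=1.0, b=0.5, horizon=3,
             candidates=(-1.0, 0.0, 1.0), effort_weight=0.01):
    """Return the first move of the lowest-cost control sequence over the horizon."""
    best_cost, best_first = float("inf"), 0.0
    for sequence in product(candidates, repeat=horizon):
        state, cost = x, 0.0
        for u in sequence:
            state = a * state + b * u  # predict with the (assumed) plant model
            cost += (state - target) ** 2 + effort_weight * u ** 2
        if cost < best_cost:
            best_cost, best_first = cost, sequence[0]
    return best_first


def run(x0, target, steps, a=1.0, b=0.5):
    """Receding-horizon loop: re-plan at every step, apply only the first move."""
    x, history = x0, [x0]
    for _ in range(steps):
        u = mpc_step(x, target, a, b)
        x = a * x + b * u  # here the real plant is assumed to match the model
        history.append(x)
    return history
```

Calling `run(0.0, 2.0, 8)` steps the state toward the target and then holds it there, because once the target is reached the zero-effort sequence has the lowest predicted cost.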

Software Development Operations (DevOps)

Elementary’s Srivatsav Nambi discussed how his company is applying well-known software development architecture and operations practices to delivering AI solutions. His five points were:

- Use modular data pipelines: ingestion of images, image preprocessing, AI inference and results monitoring stages should be isolated from each other with processed data passed between via a well-defined API. This allows the team to update different portions of their pipeline without impacting the code from other stages.

- Prefer configurations over retraining. Retraining an AI system is expensive. If there are portions of the algorithm that can be parameterized, capture those parameters in configurations that are cheap and quick to change.

- Use abstraction layers to decouple software modules. Srivatsav’s team uses Open Neural Network Exchange (ONNX) software to abstract the AI algorithm and compute hardware interface from the rest of the data pipeline. They also use common hardware APIs to abstract specific camera interfaces, so that swapping cameras does not require a major system overhaul.

- As you deploy your AI software to more and more devices, configuration control and upgrade orchestration become more and more critical. Don’t reinvent the wheel; use standard DevOps techniques.

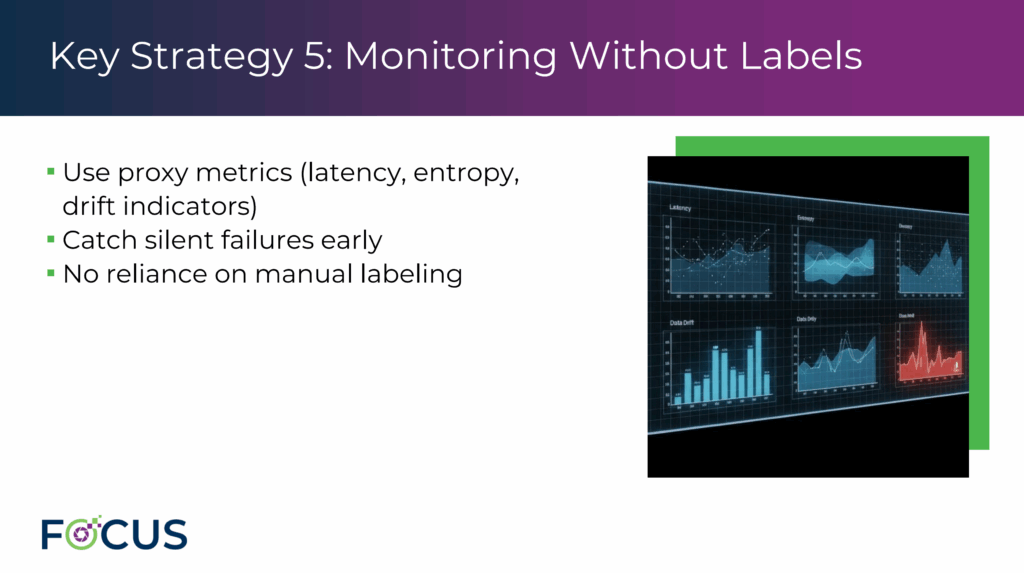

- Monitor Without Labels. This one is the most AI specific of his guidelines, but it deals with generalization of data where possible. His slide on this topic is shown below.
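Several of these guidelines can be sketched together in a few lines. This is a minimal illustration and not Elementary's implementation: every class and function name is hypothetical, the drift check is only one possible reading of "monitor without labels", and in a real system the `DefectModel` interface might wrap an ONNX runtime session instead of the toy model shown.

```python
from collections import deque
from dataclasses import dataclass
from statistics import mean
from typing import Protocol, Sequence

Image = Sequence[Sequence[int]]  # grayscale rows of 0-255 values


@dataclass(frozen=True)
class InspectionConfig:
    """Tunable parameters kept outside the model so they change without retraining."""
    reject_threshold: float = 0.5  # defect score at or above which a part is rejected
    drift_tolerance: float = 0.15  # allowed shift of the mean score from its baseline


class Camera(Protocol):            # abstraction layer over camera hardware
    def grab(self) -> Image: ...


class DefectModel(Protocol):       # abstraction layer over the inference backend
    def score(self, pixels: Sequence[float]) -> float: ...


def preprocess(image: Image) -> list:
    """Flatten the image and scale pixel values to the range 0..1."""
    return [p / 255.0 for row in image for p in row]


class ScoreMonitor:
    """Label-free check: flags when the recent mean score drifts from a baseline."""

    def __init__(self, baseline: float, tolerance: float, window: int = 20) -> None:
        self.baseline = baseline
        self.tolerance = tolerance
        self.recent = deque(maxlen=window)  # keeps only the last `window` scores

    def record(self, score: float) -> bool:
        self.recent.append(score)
        return abs(mean(self.recent) - self.baseline) > self.tolerance


def inspect_once(camera: Camera, model: DefectModel,
                 config: InspectionConfig, monitor: ScoreMonitor) -> dict:
    """Run one part through ingestion, preprocessing, inference, and monitoring."""
    pixels = preprocess(camera.grab())
    score = model.score(pixels)
    return {
        "score": score,
        "reject": score >= config.reject_threshold,  # a config value, not retraining
        "drift_alert": monitor.record(score),        # needs no ground-truth label
    }


# Stand-ins for real hardware and a real model, for illustration only.
class FixedCamera:
    def __init__(self, image: Image) -> None:
        self.image = image

    def grab(self) -> Image:
        return self.image


class DarknessModel:
    def score(self, pixels: Sequence[float]) -> float:
        return 1.0 - mean(pixels)  # darker images score as more defect-like
```

Because `inspect_once` depends only on the `Camera` and `DefectModel` interfaces, either one can be replaced without touching the other stages, and tightening `reject_threshold` is a configuration change with no retraining involved.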

Design Failure Mode and Effects Analysis

Paul Campbell of Cheerful.ai pointed out the thing that every engineer knows, and that management never wants to discuss: things will go wrong. A system, AI or otherwise, that is of business value is going to be complex, and most likely something nobody in your company has ever done before. Having problems with your design is not a failure, it is expected. Not planning for failures and generating mitigation plans is a failure of management of the design and development process. Think about what can go wrong, how it will affect your customers and your business, and create mitigation plans.
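A common way to decide which failure modes deserve a mitigation plan first is the Risk Priority Number (RPN) from classic FMEA practice: severity times occurrence times detection, each rated from 1 to 10. The talk did not present this calculation. The sketch below is an illustration of the standard technique, and the class name and the cutoff of 100 are assumptions.

```python
from dataclasses import dataclass
from typing import Iterable, List


@dataclass(frozen=True)
class FailureMode:
    description: str
    severity: int    # 1 (negligible effect) to 10 (hazardous)
    occurrence: int  # 1 (remote) to 10 (almost certain)
    detection: int   # 1 (almost always caught) to 10 (practically undetectable)

    @property
    def rpn(self) -> int:
        """Risk Priority Number: the product of the three ratings."""
        return self.severity * self.occurrence * self.detection


def prioritize(modes: Iterable[FailureMode], rpn_threshold: int = 100) -> List[FailureMode]:
    """Return modes at or above the (assumed) threshold, highest risk first."""
    flagged = [m for m in modes if m.rpn >= rpn_threshold]
    return sorted(flagged, key=lambda m: m.rpn, reverse=True)
```

As a hypothetical example, a lighting-drift failure rated 8, 5 and 6 has an RPN of 240 and would be listed ahead of a failure rated 9, 3 and 4, whose RPN is 108.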

Conclusion

A3 did a great job of pulling together industry leaders to discuss the state of the art in AI Vision Systems for Manufacturing. The majority of the presentations and panels were well planned and informative. There are quite a few companies delivering significant value with their inspection and/or bin-picking vision systems. If your production system could use vision to solve these types of problems, I encourage you to contact Simplexity so we can discuss the unique challenges of your system and the solutions that offer the most potential for overcoming those challenges.